Story Diffusion Online

StoryDiffusion: Consistent Self-Attention for Long-Range Image and Video Generation. Create beautiful art using story diffusion ONLINE for free.

StoryDiffusion can create Magic Story, achieving Long-Range Image and Video Generation! Comics Generation. StoryDiffusion creates comics in various styles through the proposed consistent self-attention, maintaining consistent character styles and attires for cohesive storytelling.

Try an Example

Beautiful Cyborg with Brown Hair

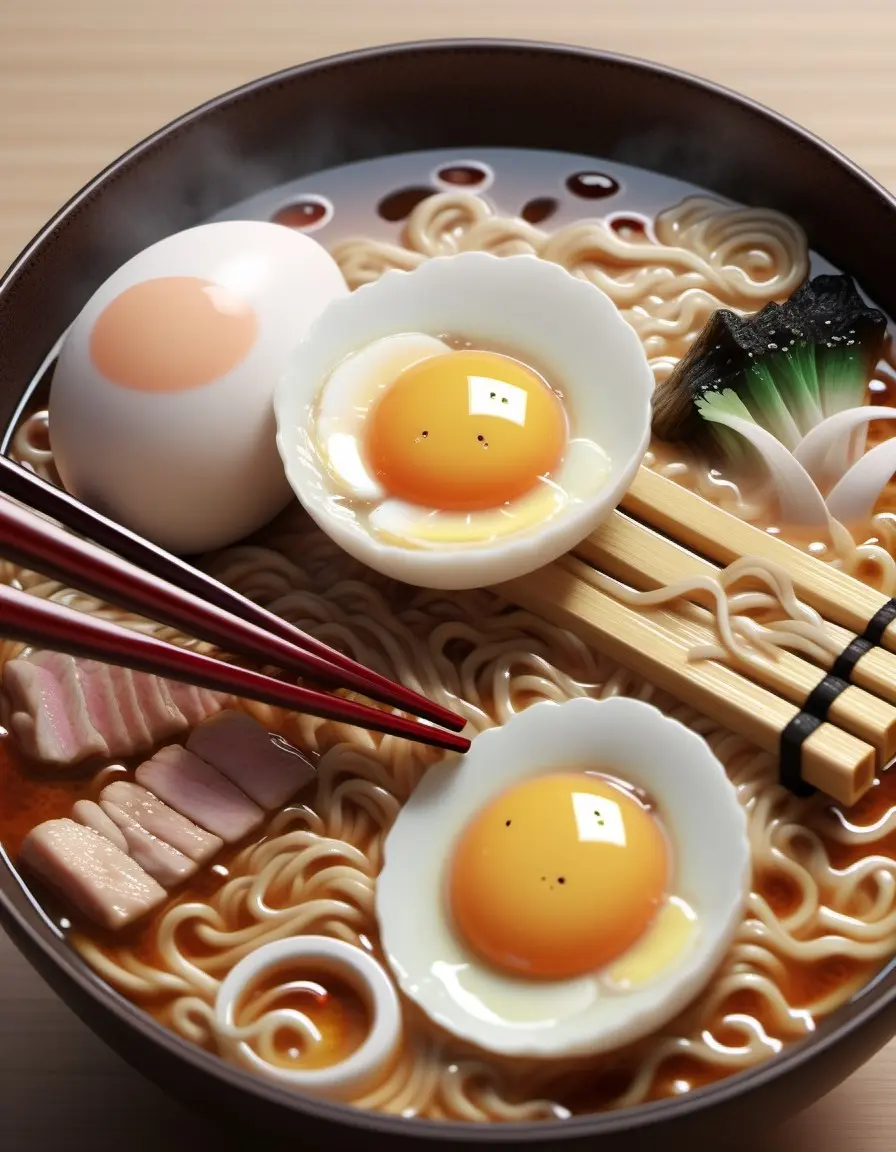

Japanese Ramen

Short Hair Girl

Fashion Dog

Train and Sunset

Sleeping Girl

Starry Night

Bubbles and Flowers

A Girl with Sundress

Neon Car

Pink Car

Ocean

Laser Sword Kitten

Cute Creature

Bear with Ballon

LEGO Family

APPLE II PC

Sunflower and Hedgehog

Retrofuturistic World

Eco-friendly

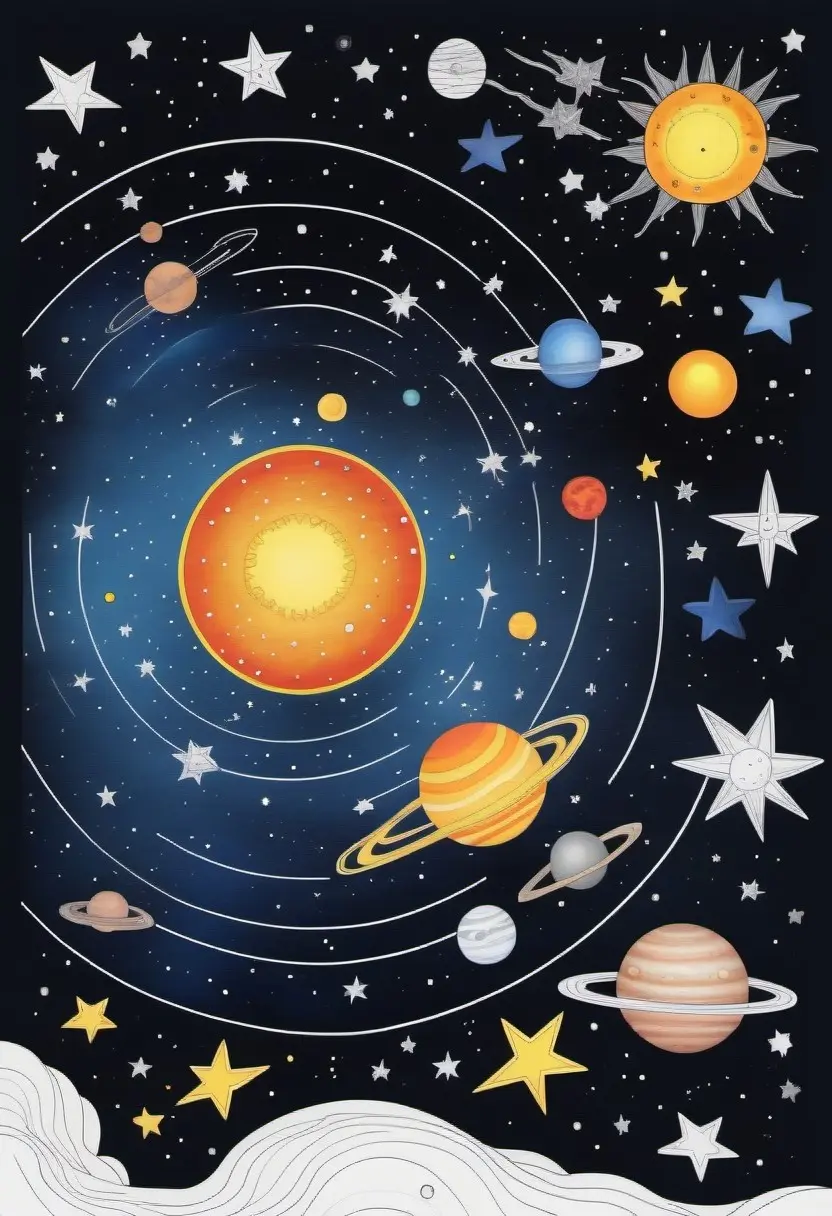

Solar System

Living Room

Winne the Pooh

Mountains

Magical Girl

Making your dreams come true

Generate amazing AI Art Comics and Videos from text using Story Diffusion.

Easy to use

Storydiffusionweb.com is an easy-to-use interface for creating images using the recently released Story Diffusion XL image generation model.

-

High quality images

- It can create high quality images of anything you can imagine in seconds–just type in a text prompt and hit Generate.

-

GPU enabled and fast generation

- Perfect for running a quick sentence through the model and get results back rapidly.

Privacy

We care about your privacy.

-

Anonymous

- We don't collect and use ANY personal information, neither store your text or image.

-

Freedom

- Perfect for running a quick sentence through the model and get results back rapidly.

Frequently asked questions

If you can’t find what you’re looking for, email our support team and if you’re lucky someone will get back to you.

-

-

What is Story Diffusion?

Story Diffusion is consistent Self-Attention for Long-Range Image and Video Generation. StoryDiffusion can create Magic Story, achieving Long-Range Image and Video Generation!

-

What is the difference between Story Diffusion and other AI image generators?

Story Diffusion is unique in that it can generate high-quality images with a high degree of control over the output. It can produce output using various descriptive text inputs like style, frame, or presets. In addition to creating images, SD can add or replace parts of images thanks to inpainting and extending the size of an image, called outpainting.

-

What was the Story Diffusion model trained on?

The underlying dataset for Story Diffusion was the 2b English language label subset of LAION 5b https://laion.ai/blog/laion-5b/, a general crawl of the internet created by the German charity LAION.

-

What is the copyright for using Story Diffusion generated images?

The area of AI-generated images and copyright is complex and will vary from jurisdiction to jurisdiction.

-

Can artists opt-in or opt-out to include their work in the training data?

There was no opt-in or opt-out for the LAION 5b model data. It is intended to be a general representation of the language-image connection of the Internet.

-

What kinds of GPUs will be able to run Story Diffusion, and at what settings?

Most NVidia and AMD GPUs with 8GB or more.

-

-

-

How does Story Diffusion work?

Instead of operating in the high-dimensional image space, Story Diffusion first compresses the image into the latent space. The model then gradually destroys the image by adding noise, and is trained to reverse this process and regenerate the image from scratch.

-

What are some tips for creating effective prompts for Story Diffusion?

To create effective prompts for Story Diffusion, it’s important to provide a clear and concise description of the image you want to generate. You should also use descriptive language that is specific to the type of image you want to generate. For example, if you want to generate an image of a sunset, you might use words like "orange", "red", and "purple" to describe the colors in the image.

-

Which model are you using?

We are using the Story Diffusion XL model, which is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input. Compared to previous versions of Story Diffusion, SDXL leverages a three times larger UNet backbone: The increase of model parameters is mainly due to more attention blocks and a larger cross-attention context as SDXL uses a second text encoder.

-

What is the copyright on images created through Story Diffusion Online?

Images created through Story Diffusion Online are fully open source, explicitly falling under the CC0 1.0 Universal Public Domain Dedication.

-

What is the difference between SDXL Turbo and SDXL 1.0?

SDXL Turbo (Story Diffusion XL Turbo) is an improved version of SDXL 1.0 (Story Diffusion XL 1.0), which was the first text-to-image model based on diffusion models. SDXL Turbo implements a new distillation technique called Adversarial Diffusion Distillation (ADD), which enables the model to synthesize images in a single step and generate real-time text-to-image outputs while maintaining high sampling fidelity.

-

-

-

Can I use Story Diffusion for commercial purposes?

Yes, you can use Story Diffusion for commercial purposes. Story Diffusion model has been released under a permissive license that allows users to generate images for both commercial and non-commercial purposes.

-

How can I use Story Diffusion to generate images?

There are primarily two ways that you can use Story Diffusion to create AI images, either through an API on your local machine or through an online software program like https://stablediffusionweb.com. If you plan to install Story Diffusion locally, you need a computer with beefy specs to generate images quickly.

-

What are Diffusion Models?

Generative models are a class of AI machine learning models that can generate new data based on training data.

-

Can we expect more features?

Absolutely. We are working on that.

-

How to write creative and high-quality prompt?

Please try our Prompt Database.

-

What is SDXL Turbo?

SDXL Turbo is a new text-to-image model that can generate realistic images from text prompts in a single step and in real time, using a novel distillation technique called Adversarial Diffusion Distillation (ADD).

-